Team:St Andrews/project/ethics/communication

From 2010.igem.org

| Line 42: | Line 42: | ||

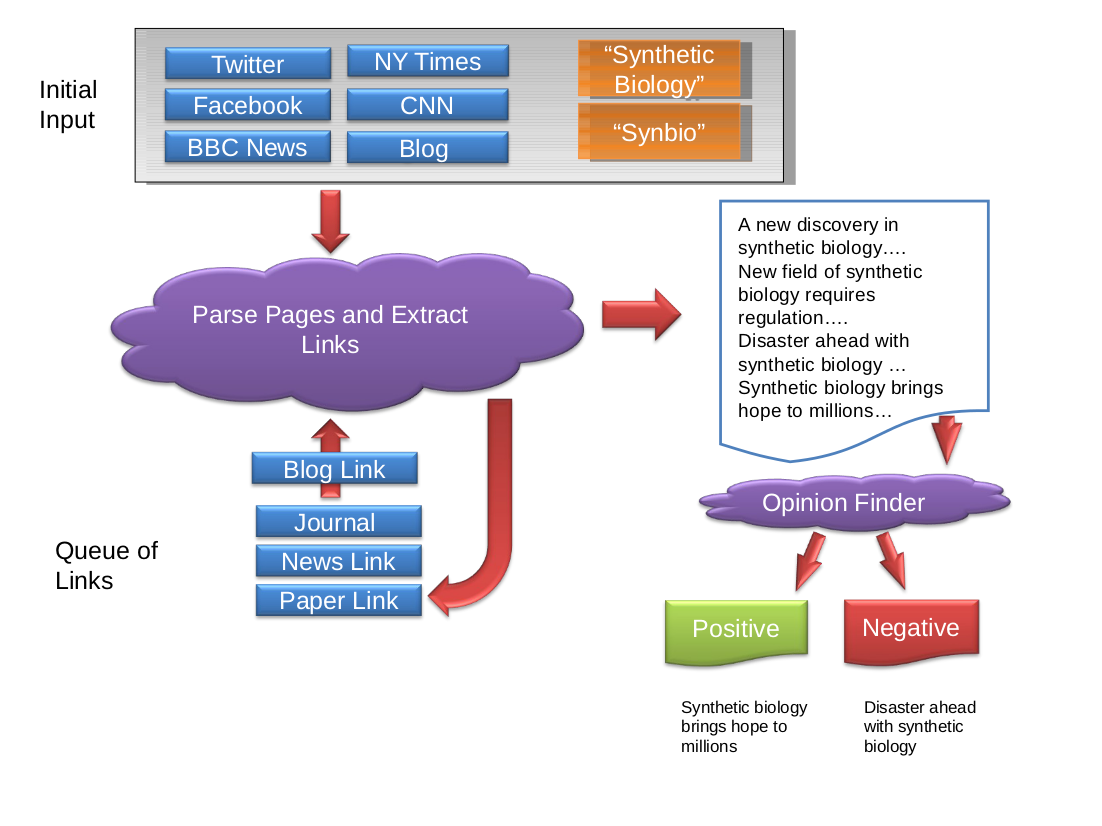

The following is a visualisation of the cralwer algorithm: | The following is a visualisation of the cralwer algorithm: | ||

| - | [[Image:StAndrews Crawler.png]] | + | [[Image:StAndrews Crawler.png | 800px]] |

==Implementation== | ==Implementation== | ||

[[:St_Andrews/project/ethics/communication/code | Python Implementation of the Algorithm]] | [[:St_Andrews/project/ethics/communication/code | Python Implementation of the Algorithm]] | ||

=Results= | =Results= | ||

Revision as of 21:14, 27 October 2010

Communication


Premise

Real-time Internet communication is increasingly common: the so-called Facebook generation is growing up acquainted with a dizzying array of instantaneous communication methods. The inception of email was heralded as a revolution in communication, yet today email is losing ground to newer channels. In its place, instant messaging and social network messaging have taken precedence. Combined with the vast quantities of blogs, forum posts, wikis and other forms of user-generated content, the volume of publicly accessible communications is immense. From a human practices perspective, this provides a vast and frequently changing dataset which gives insight into how people communicate.

Given a moderately controversial topic such as synthetic biology, this dataset allows us to investigate a number of metrics and trends relating to the topic's appearance in communications. By gathering communications over a period of time, we are able to investigate the following questions:

- How popular is synthetic biology in relation to search terms associated with popular culture (football, Lady Gaga, politics, etc.)?

- How popular is synthetic biology in relation to traditional sciences?

- How popular is synthetic biology in relation to other domain-specific sciences?
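As a rough illustration of these comparisons, relative popularity can be taken as the ratio of the number of collected communications mentioning one term to the number mentioning another. The following is a minimal sketch under that assumption; the function names and the sample messages are hypothetical and are not part of the project's code.

```python
def mention_count(messages, term):
    """Count messages containing the term (case-insensitive substring match)."""
    needle = term.lower()
    return sum(1 for message in messages if needle in message.lower())


def relative_popularity(messages, term, baseline):
    """Ratio of mentions of `term` to mentions of `baseline`.

    Returns None when the baseline term never appears, since the ratio
    is undefined in that case.
    """
    baseline_count = mention_count(messages, baseline)
    if baseline_count == 0:
        return None
    return mention_count(messages, term) / baseline_count


# Hypothetical sample data, for illustration only.
sample = [
    "Synthetic biology could change medicine",
    "Great football match last night",
    "Football transfer rumours",
    "More football highlights",
    "I read about synthetic biology and iGEM today",
]
ratio = relative_popularity(sample, "synthetic biology", "football")
```

A plain substring match is the simplest choice here; it will also count a term that appears inside a longer word, so a real comparison may need word-boundary matching.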

If the collected data is parsed using a natural language analysis library such as the excellent [http://www.cs.pitt.edu/mpqa/opinionfinderrelease/ OpinionFinder] from the University of Pittsburgh, one can draw further conclusions. Natural language analysis software does not guarantee accurate analysis of language, but it provides a good attempt. Identifying "synthetic biology is good" as positive and "synthetic biology is evil" as negative is not a challenge; however, "systems biology is excellent, unlike synthetic biology" and "synthetic biology is good at being bad" are considerably harder. Training the software and methodically checking selected sets of output lessens the risk of spurious results. We are then able to acquire a set of numerical figures representing the overall opinion of each collection of data. Given these figures, we can analyse data gathered over a period of time to investigate the following:

- The standard deviation of opinion of synthetic biology: is opinion consistent or frequently changing?

- The standard deviation of opinion of synthetic biology compared to the standard deviation of opinion of traditional science: how does the consistency of opinion differ?

- The standard deviation of opinion of synthetic biology compared to the standard deviation of opinion of elements of popular culture: how does the consistency of opinion differ?
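The comparisons listed above could be computed along the following lines. This is only a sketch: it assumes each collection of data has already been reduced to a single opinion score between -1 (negative) and +1 (positive), which is an assumed scale rather than OpinionFinder's actual output format, and the scores shown are made up.

```python
import statistics


def opinion_spread(scores):
    """Population standard deviation of a series of opinion scores."""
    return statistics.pstdev(scores)


def compare_consistency(scores_by_topic):
    """Return topics ordered from most consistent opinion to least."""
    spreads = {topic: opinion_spread(s) for topic, s in scores_by_topic.items()}
    return sorted(spreads, key=spreads.get)


# Hypothetical scores, one per collection date; -1 is negative, +1 positive.
scores_by_topic = {
    "synthetic biology": [0.5, -0.5, 0.5, -0.5],
    "physics": [0.25, 0.25, 0.25, 0.25],
}
ordering = compare_consistency(scores_by_topic)
```

`statistics.pstdev` treats the scores as the whole population; if the collections are regarded as a sample of a longer period, `statistics.stdev` would be the more appropriate choice.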

Technical Solution

Theory

Before one can reap the benefits of having access to such a great pool of data, one must answer the challenge of collecting it. The web stores exabytes of data, so collecting and parsing the entirety of the available data is simply not an option. Nor is it required: when interested in a set of related terms such as {synthetic biology, synbio, igem}, one can disregard large portions of the web. Furthermore, if one is gathering data relating to social communication, a number of start points quickly become apparent. Firstly, several social networks offer a fairly standard XML-based API; secondly, virtually every so-called web 2.0 site organises data via some form of chronological hierarchy (be it through metadata or simply via the removal of old articles from the home page of the site).

These two features of the web allow us to devise a simple algorithm for collecting data. The algorithm starts at a number of popular hubs of discourse (such as large news sites, social networks, newspapers, journals, blogs, etc.) and proceeds through every site linked from each site, so long as a term of interest is found. Given sufficient running time, it will crawl through all sites reachable from the original sites via a path of pages that mention the term. Given a sufficiently widespread set of start sites, it approaches coverage of every site on the web that discusses the term. This is not always required or (when using a remarkably generic search term) feasible, and thus one may wish to impose an artificial limit. The algorithm therefore makes a best attempt to acquire as large a quantity as possible of timely social communications regarding a chosen subject.
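Two building blocks of such a crawler are deciding whether a page mentions a term of interest and collecting the hyperlinks it contains. The following sketch shows one possible way to do both using only the Python standard library; the names `SEARCH_TERMS`, `mentions_any`, `LinkExtractor` and `extract_links`, and the example URLs, are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# The set of related terms given in the text above.
SEARCH_TERMS = {"synthetic biology", "synbio", "igem"}


def mentions_any(text, terms=SEARCH_TERMS):
    """True if the text contains at least one term, ignoring case."""
    lowered = text.lower()
    return any(term in lowered for term in terms)


class LinkExtractor(HTMLParser):
    """Collects the absolute URLs of all <a href="..."> tags in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return every hyperlink target found in the HTML, in document order."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content, for illustration only.
page = '<p>News from iGEM: <a href="/synbio">more</a> <a href="http://example.org/x">x</a></p>'
links = extract_links(page, "http://example.com/news")
```

Pages that fail `mentions_any` can be dropped without following their links, which is what lets the crawler disregard large portions of the web.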

The pseudocode of the algorithm is as follows:

crawler(searchterm):
    S = Stack {facebook, twitter, bbcnews, cnn, foxnews, guardian, times, nytimes .. myawesomeblog}
    while S is not empty and arbitrary threshold is not reached:
        crawlerparser(S, searchterm)

crawlerparser(links, searchterm):
    results = {}
    for each link popped from links
        if link contains searchterm
            add link to results
    for each result in results
        if result is old /* either result appears in an earlier dated result file or metadata identifies it as old */
            disregard
        else
            add all hyperlinks in result to links
            output result-$(date)
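The pseudocode can be turned into runnable Python roughly as follows. This is a sketch and not the team's linked implementation: the `fetch` and `is_old` callbacks, the `max_pages` limit standing in for the arbitrary threshold, and the `seen` set that prevents revisiting pages are all assumptions added here. An in-memory dictionary stands in for the real web so that the example runs without network access.

```python
def crawl(start_links, searchterm, fetch, is_old, max_pages=1000):
    """Crawl outward from start_links, following links only from pages
    that mention searchterm and are not old.

    fetch(link) must return (text, outgoing_links) or None on failure;
    is_old(link, text) decides whether a page is out of date. Both are
    supplied by the caller and are assumptions of this sketch.
    """
    stack = list(start_links)
    seen = set(stack)
    results = []
    visited = 0
    while stack and visited < max_pages:
        link = stack.pop()
        visited += 1
        page = fetch(link)
        if page is None:
            continue
        text, outgoing = page
        if searchterm.lower() not in text.lower():
            continue  # term of interest not found: do not follow this page
        if is_old(link, text):
            continue  # disregard stale results
        results.append((link, text))
        for target in outgoing:
            if target not in seen:  # avoid revisiting pages and looping forever
                seen.add(target)
                stack.append(target)
    return results


# Hypothetical in-memory "web" used in place of real HTTP requests.
WEB = {
    "hub": ("synthetic biology news", ["blog", "sport"]),
    "blog": ("my synthetic biology project", ["hub", "archive"]),
    "sport": ("football scores", ["blog"]),
    "archive": ("old synthetic biology post", []),
}

found = crawl(
    ["hub"],
    "synthetic biology",
    fetch=WEB.get,
    is_old=lambda link, text: link == "archive",
)
```

Passing `fetch` and `is_old` in as parameters keeps the traversal logic separate from the details of downloading pages and judging their age, both of which vary from site to site.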

This algorithm outputs a list of phrases extracted from the web pertaining to the search phrase. This output is then redirected to a file, which serves as the input for OpinionFinder to analyse.
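Writing the collected phrases to a dated file might look like the sketch below. The file name follows the `result-$(date)` pattern from the pseudocode; the `.txt` extension, the one-phrase-per-line layout and the `write_results` function name are assumptions, and the exact input format OpinionFinder expects should be checked against its own documentation.

```python
from datetime import date
from pathlib import Path


def write_results(phrases, directory, day=None):
    """Write one phrase per line to result-YYYY-MM-DD.txt and return the path."""
    day = day or date.today()
    path = Path(directory) / f"result-{day.isoformat()}.txt"
    # Collapse internal whitespace so each phrase stays on a single line.
    lines = [" ".join(phrase.split()) for phrase in phrases]
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path
```

Naming each file after its collection date keeps successive runs separate, which is what makes the later comparison of opinion over time possible.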

The following is a visualisation of the crawler algorithm:

[[Image:StAndrews Crawler.png | 800px]]

Implementation

Python Implementation of the Algorithm
