Team:Heidelberg/Modeling

From 2010.igem.org



===Characteristic of the Network===


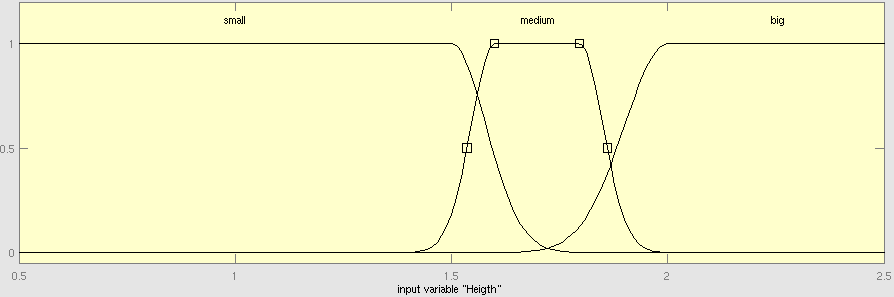

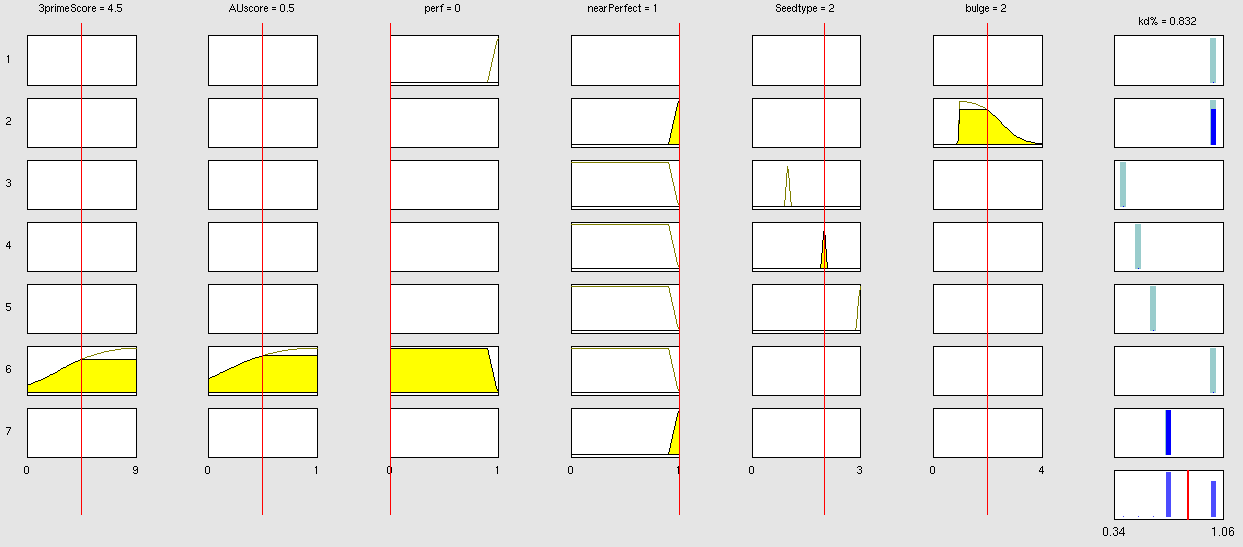

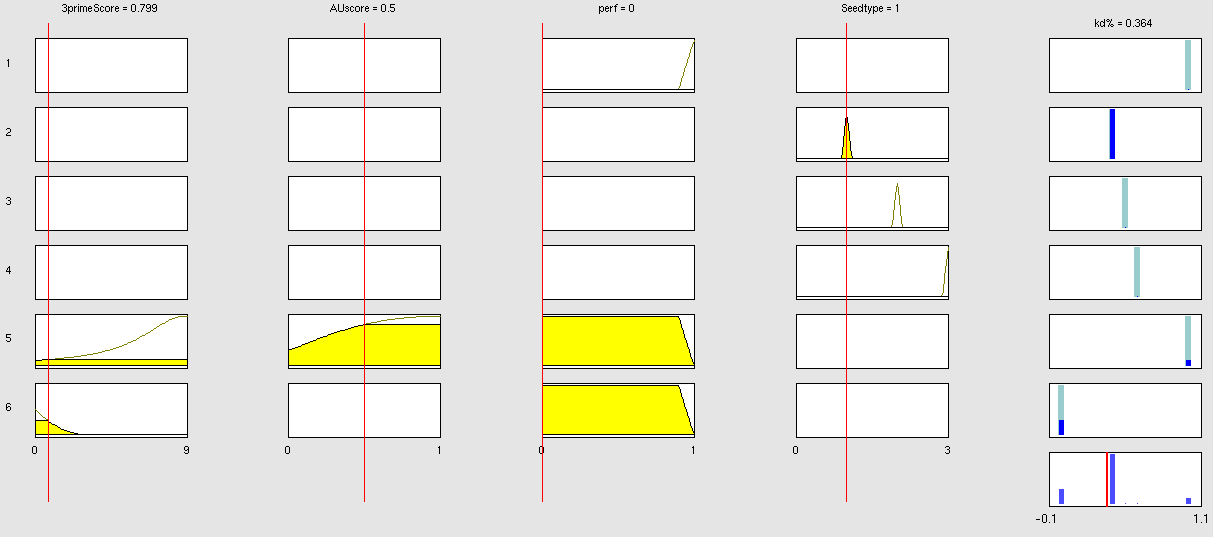

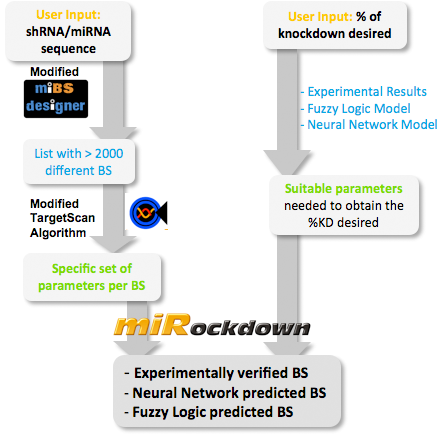

The neural network is a multilayer feedforward network with two layers. The first layer is connected to the network input and comprises 15 artificial neurons. The second layer is connected to the first and produces the output. A sigmoid activation function was used for the first layer and a linear activation function for the second. The cost function (sum of squared errors) was minimized using Bayesian regularization, which takes place within the Levenberg-Marquardt algorithm. The algorithm updates the weight and bias values according to Levenberg-Marquardt optimization and, by applying a Bayesian framework to the neural network learning problem, overcomes the problem of interpolating noisy data (MacKay 1992).
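The architecture and cost function described above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the code used for the model: the logistic form of the sigmoid, the random initialization, and the names `init_params`, `forward` and `regularized_cost` are assumptions. The objective is written in the form commonly used for Bayesian regularization, F = beta * E_D + alpha * E_W (up to constant factors), where E_D is the sum of squared errors and E_W the sum of squared weights and biases. Here alpha and beta are fixed by the caller, whereas Bayesian regularization re-estimates them during training, and the Levenberg-Marquardt update step itself is not shown.

```python
import math
import random

N_HIDDEN = 15  # from the text: the first layer has 15 artificial neurons


def sigmoid(x):
    """Logistic sigmoid (assumed form), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def init_params(n_inputs, n_outputs, seed=0):
    """Small random weights and zero biases (hypothetical initialization)."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        "W1": matrix(N_HIDDEN, n_inputs),   # input -> first layer
        "b1": [0.0] * N_HIDDEN,
        "W2": matrix(n_outputs, N_HIDDEN),  # first layer -> output layer
        "b2": [0.0] * n_outputs,
    }


def forward(params, x):
    """Sigmoid first layer followed by a linear output layer."""
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(params["W1"], params["b1"])
    ]
    return [
        sum(w * h for w, h in zip(row, hidden)) + b
        for row, b in zip(params["W2"], params["b2"])
    ]


def regularized_cost(params, inputs, targets, alpha, beta):
    """F = beta * E_D + alpha * E_W with fixed alpha and beta (assumed form)."""
    # E_D: sum of squared errors over all samples and outputs
    e_d = sum(
        (y - t) ** 2
        for x, target in zip(inputs, targets)
        for y, t in zip(forward(params, x), target)
    )
    # E_W: sum of squared weights and biases (the regularization term)
    e_w = sum(w ** 2 for key in ("W1", "W2") for row in params[key] for w in row)
    e_w += sum(b ** 2 for key in ("b1", "b2") for b in params[key])
    return beta * e_d + alpha * e_w
```

With `alpha = 0` the objective reduces to the plain sum squared error. A larger `alpha` relative to `beta` penalizes large weights more strongly, which gives a smoother fit and is what makes the approach robust when interpolating noisy data.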


===Results===

Revision as of 16:04, 26 October 2010
